Begin with TensorFlow's "Hello World"!

A beginner's tutorial to start your journey with TensorFlow

Table of contents

- What is TensorFlow?

- Why TensorFlow?

- What does TensorFlow do?

- How does TensorFlow Work?

- What is TensorFlow used for? What can you do with TensorFlow?

- Setting up the requirements

- How does TensorFlow Work?

- Installing TensorFlow

- Importing TensorFlow

- tf.function and AutoGraph

- What do we mean by a Tensor?

- Why Tensors?

- Variables

- Operations

By the end of this tutorial, you will learn about:

- How TensorFlow works

- Building a graph

- What a tensor is

- Defining multidimensional arrays using TensorFlow

- How TensorFlow handles variables

- How tf.function and AutoGraph turn Python functions into graphs

- Operations in TensorFlow

First, let's answer some common questions!

What is TensorFlow?

In 2015, Google released its own open-source machine learning library called TensorFlow. It supports deep learning, neural networks, and other numerical computations, and it is one of the most widely used machine learning frameworks today.

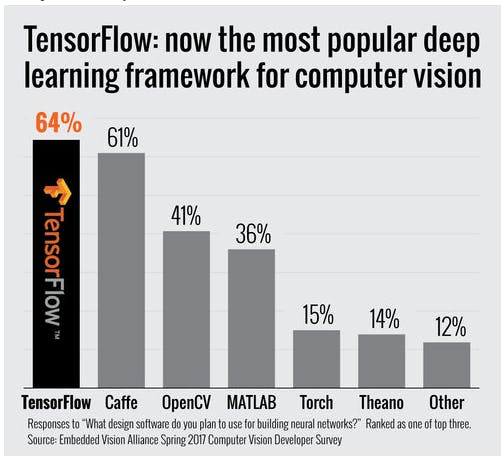

Why TensorFlow?

TensorFlow makes it faster to implement machine learning techniques. It supports the whole workflow: collecting data, training models, serving predictions, and delivering the results.

What does TensorFlow do?

It can train and run deep neural networks for things like handwritten digit classification, image recognition, word embeddings, and natural language processing (NLP). The code contained in its software libraries can be added to any application to help it learn these tasks.

How does TensorFlow Work?

TensorFlow combines various machine learning and deep learning (neural network) models and algorithms, and makes them usable through a common interface.

What is TensorFlow used for? What can you do with TensorFlow?

TensorFlow is designed to streamline the process of developing and executing advanced analytics applications for users such as data scientists, statisticians, and predictive modelers.

Setting up the requirements

How to install TensorFlow

Full instructions and tutorials are available on tensorflow.org, but here are the basics.

System requirements:

- Python 3.7+

- pip 19.0 or later (requires manylinux2010 support; TensorFlow 2 requires a newer version of pip)

- Ubuntu 16.04 or later (64-bit)

- macOS 10.12.6 (Sierra) or later (64-bit) (no GPU support)

- Windows 7 or later (64-bit)

How to update TensorFlow

The pip package manager offers a simple way to upgrade TensorFlow, regardless of the environment: pip install --upgrade tensorflow.

Prerequisites:

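Python 3.6-3.9 installed and configured. Check the Python version before starting: the tensorflow==2.2.0 package pinned below is only published for Python 3.5 to 3.8, so use Python 3.6 to 3.8 for this tutorial. TensorFlow 2 installed.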


How does TensorFlow Work?

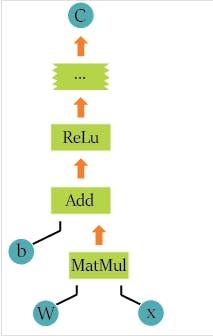

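TensorFlow defines computations as graphs, which are made of operations (also known as “ops”). So, working with TensorFlow amounts to defining a series of operations in a graph. In TensorFlow 2, operations run eagerly (immediately) by default, and tf.function is what turns a Python function into such a graph.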
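To make the idea of a graph concrete, here is a minimal plain-Python sketch (no TensorFlow involved) of a dataflow graph. Each node is an operation, the edges carry the values flowing between nodes, and evaluating an output node pulls values through the graph. The Node class and the evaluate helper are hypothetical names for illustration only. TensorFlow's real graph machinery is far more elaborate.

```python
import operator
from typing import Callable, List, Optional


class Node:
    """One operation ("op") in a toy dataflow graph."""

    def __init__(self, name: str, fn: Callable, inputs: Optional[List["Node"]] = None):
        self.name = name            # label of the op, e.g. "add"
        self.fn = fn                # the computation this op performs
        self.inputs = inputs or []  # incoming edges: nodes whose outputs feed this op


def evaluate(node: Node, cache: Optional[dict] = None):
    """Compute a node's value by first evaluating every node it depends on."""
    if cache is None:
        cache = {}
    if node.name not in cache:  # each node is computed at most once per evaluation
        args = [evaluate(n, cache) for n in node.inputs]
        cache[node.name] = node.fn(*args)
    return cache[node.name]


# Building the graph only records its structure; nothing is computed yet.
a = Node("constant_a", lambda: 2)
b = Node("constant_b", lambda: 3)
c = Node("add", operator.add, [a, b])
d = Node("multiply", operator.mul, [c, b])

# Running the graph: values flow along the edges from the constants to the output.
print(evaluate(c))  # 5
print(evaluate(d))  # 15
```

The design choice here mirrors the point above: describing the computation and running it are two separate steps.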

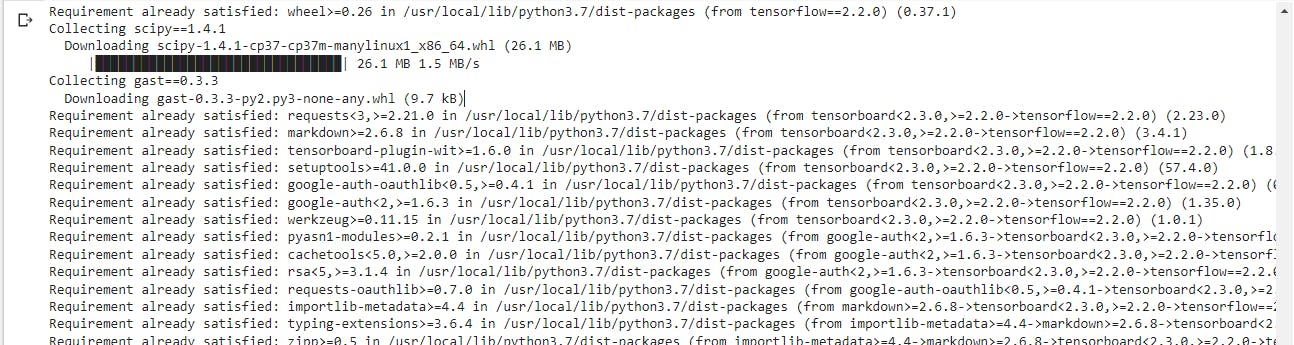

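Installing TensorFlow

Run the following in notebook cells. The leading ! tells Jupyter to run the line as a shell command. Leave it out when running pip from a terminal.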

!pip install grpcio==1.24.3

!pip install tensorflow==2.2.0

When the installation finishes, pip reports "Successfully installed" followed by the packages it installed.

Importing TensorFlow

To use TensorFlow, we need to import the library. We import it under the conventional alias tf, so its modules can be accessed as tf.module_name. The version check stops with an error if a different TensorFlow version is loaded:

import tensorflow as tf
if tf.__version__ != '2.2.0':
    print(tf.__version__)
    raise ValueError('please upgrade to TensorFlow 2.2.0, or restart your Kernel (Kernel->Restart & Clear Output)')

IMPORTANT! => Please restart the kernel by clicking "Kernel" -> "Restart & Clear Output" and wait until all output disappears. Then your changes will be picked up.

After you have restarted the kernel, re-run the previous cell to import TensorFlow, then continue to the next section.

tf.function and AutoGraph

Now we call the TensorFlow functions that construct new tf.Operation and tf.Tensor objects. In a graph, each tf.Operation is a node and each tf.Tensor is an edge.

Let's add two constants. Inside a graph (for example, while a tf.function is being traced), calling tf.constant([2], name='constant_a') adds a single tf.Operation named "constant_a" to that graph. This operation produces the value [2] (a one-element vector), and the call returns a tf.Tensor named "constant_a:0" that represents it, where ":0" marks the operation's first output. In TensorFlow 2's default eager mode, the same call simply runs immediately and returns a tensor holding the value.

a=tf.constant([2],name='constant_a')

b=tf.constant([3],name='constant_b')
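Where does the ":0" in "constant_a:0" come from? In a graph, a tensor is named after the operation that produces it plus the index of that output. If an operation name is already taken, TensorFlow makes it unique by appending a suffix such as _1. The plain-Python sketch below mimics that naming scheme in simplified form. ToyGraph and add_op are hypothetical names, not TensorFlow APIs, and a real graph tracks far more than names.

```python
class ToyGraph:
    """Hypothetical stand-in for a graph that hands out op and tensor names."""

    def __init__(self):
        self.op_names = set()

    def add_op(self, name: str, num_outputs: int = 1):
        # Make the op name unique, in the style of TensorFlow: name, name_1, name_2, ...
        unique = name
        i = 1
        while unique in self.op_names:
            unique = f"{name}_{i}"
            i += 1
        self.op_names.add(unique)
        # Each output tensor is named "<op name>:<output index>".
        return [f"{unique}:{k}" for k in range(num_outputs)]


g = ToyGraph()
print(g.add_op("constant_a"))  # ['constant_a:0']
print(g.add_op("constant_b"))  # ['constant_b:0']
print(g.add_op("constant_a"))  # ['constant_a_1:0']
```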

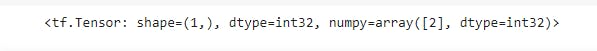

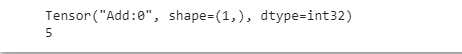

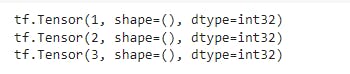

Now let us look at tensor a:

a

As you can see, it shows the shape, data type (dtype), and value of the tensor.

Now let us print just its value by running the following code:


tf.print(a.numpy()[0])

This prints 2, the single value stored in the tensor a.

Next, let's explore functions in TensorFlow:

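@tf.function
def add(a, b):
    c = tf.add(a, b)
    # A Python print runs only while TensorFlow traces the function, so it shows
    # a symbolic tensor rather than a value; tf.print prints values on every call.
    print(c)
    return c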

Decorating a Python function with @tf.function tells TensorFlow to trace it into a static execution graph. During tracing, AutoGraph (which tf.function applies by default) converts Python control flow such as if, for, and while into the equivalent TensorFlow control-flow operations, so the whole function becomes a single TensorFlow graph. Later calls with compatible inputs reuse that graph instead of running the Python code again.

result=add(a,b)

tf.print(result[0])

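This prints 5, the sum of 2 and 3. On the first call you will also see the output of the Python print inside add, because tracing runs the Python body once. That output is a symbolic tensor, not a value.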

Even this simple example of adding two constants captures the basis of TensorFlow: define your operations (in this case, the constants and tf.add), wrap them in a Python function (here, add), and decorate that function with @tf.function.
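The real tf.function is far more involved: it builds a tf.Graph with AutoGraph and caches one traced graph per input signature. The plain-Python sketch below only imitates its core "trace once, reuse later" idea. This idea also explains why the print(c) inside add runs only on the first call. Trace, Symbol, and toy_function are hypothetical names invented for illustration, and the sketch supports only + and *.

```python
import functools
import operator


class Trace:
    """Operations recorded while running a Python function on symbolic inputs."""

    def __init__(self, num_inputs):
        self.num_inputs = num_inputs
        self.ops = []  # each entry: (python_op, index_of_left_value, index_of_right_value)

    def record(self, op, left, right):
        self.ops.append((op, left.index, right.index))
        # The new op's output occupies the next slot after the inputs and earlier ops.
        return Symbol(self, self.num_inputs + len(self.ops) - 1)

    def run(self, args):
        values = list(args)        # slots 0..num_inputs-1 hold the real inputs
        for op, i, j in self.ops:  # each op appends its result to the next slot
            values.append(op(values[i], values[j]))
        return values


class Symbol:
    """Stand-in for a value while tracing: arithmetic is recorded, not computed."""

    def __init__(self, trace, index):
        self.trace = trace
        self.index = index

    def __add__(self, other):
        return self.trace.record(operator.add, self, other)

    def __mul__(self, other):
        return self.trace.record(operator.mul, self, other)


def toy_function(py_fn):
    """Trace py_fn once per number of arguments, then replay the recorded ops."""
    traces = {}

    @functools.wraps(py_fn)
    def wrapper(*args):
        key = len(args)  # simplification: tf.function keys traces on the input signature
        if key not in traces:
            trace = Trace(len(args))
            symbols = [Symbol(trace, i) for i in range(len(args))]
            output = py_fn(*symbols)  # the Python body runs here, only once
            traces[key] = (trace, output.index)
        trace, out_index = traces[key]
        return trace.run(args)[out_index]

    return wrapper


@toy_function
def add(a, b):
    c = a + b
    print("tracing add")  # Python side effect: happens only during tracing
    return c


print(add(2, 3))    # prints "tracing add", then 5
print(add(10, 20))  # reuses the trace: prints only 30
```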


What do we mean by a Tensor?

In TensorFlow, all data passed between the operations of a computation graph takes the form of tensors (multi-dimensional arrays). Tensors flowing through a graph of operations give TensorFlow its name.

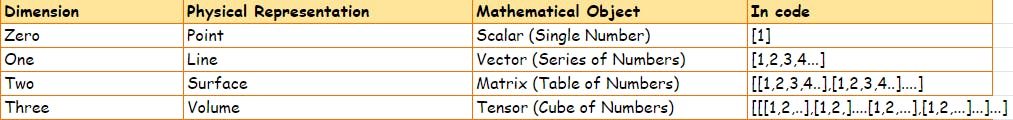

In simple terms, the number of dimensions of a tensor (its rank) describes how its values are laid out:

- Zero dimensions: a single object (a scalar)

- One dimension: values along a line (a vector)

- Two dimensions: values along a series of lines, i.e. a surface (a matrix)

- Three dimensions: values along a series of surfaces, i.e. a volume

- Four dimensions: values along a series of volumes (hyperspace)

To summarize:

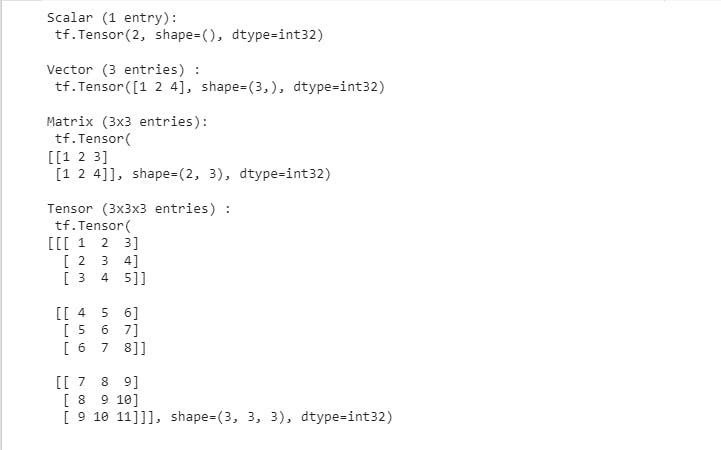
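The rank of a tensor is simply how many levels of nesting its values have, and its shape lists the length along each level. The plain-Python sketch below makes this concrete, with nested lists standing in for tensors. It uses the same values as the TensorFlow example that follows. shape_of and rank_of are hypothetical helpers, and the sketch assumes regular (non-ragged) nesting.

```python
def shape_of(value):
    """Return the shape of a nested list, e.g. [[1, 2, 3], [1, 2, 4]] -> (2, 3).

    A plain number is a scalar, whose shape is the empty tuple ().
    Assumes regular nesting: every element at a given level has the same shape.
    """
    if not isinstance(value, list):
        return ()
    if not value:
        return (0,)
    inner = shape_of(value[0])
    # Enforce the regularity assumption instead of silently returning a wrong shape.
    for item in value[1:]:
        if shape_of(item) != inner:
            raise ValueError("ragged nesting: elements have different shapes")
    return (len(value),) + inner


def rank_of(value):
    """The rank (number of dimensions) is the length of the shape."""
    return len(shape_of(value))


scalar = 2
vector = [1, 2, 4]
matrix = [[1, 2, 3], [1, 2, 4]]
tensor3d = [[[1, 2, 3], [2, 3, 4], [3, 4, 5]],
            [[4, 5, 6], [5, 6, 7], [6, 7, 8]],
            [[7, 8, 9], [8, 9, 10], [9, 10, 11]]]

for name, v in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("tensor", tensor3d)]:
    print(name, "rank:", rank_of(v), "shape:", shape_of(v))
```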

Defining multi-dimensional arrays using TensorFlow

Now let us see how to create these types of arrays using TensorFlow:

Scalar = tf.constant(2)
Vector = tf.constant([1,2,4])
Matrix = tf.constant([[1,2,3],[1,2,4]])
Tensor = tf.constant([ [[1,2,3],[2,3,4],[3,4,5]], [[4,5,6],[5,6,7],[6,7,8]], [[7,8,9],[8,9,10],[9,10,11]] ])
print("Scalar (1 entry):\n %s \n" % Scalar)
print("Vector (3 entries):\n %s \n" % Vector)
print("Matrix (2x3 entries):\n %s \n" % Matrix)
print("Tensor (3x3x3 entries):\n %s \n" % Tensor)

This will give us an output:

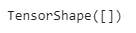

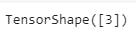

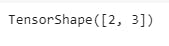

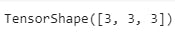

The shape attribute returns the shape of each of these data structures. The function tf.shape(x) also exists, but it returns the shape as a tensor.

Scalar.shape

Vector.shape

Matrix.shape

Tensor.shape

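This gives TensorShape([]) for the scalar, TensorShape([3]) for the vector, TensorShape([2, 3]) for the matrix, and TensorShape([3, 3, 3]) for the 3-D tensor.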

Now that we are clear on functions and data structures, let's play around with them and see what happens:

Matrix_one = tf.constant([[1,2,3],[2,3,4],[3,4,5]])
Matrix_two = tf.constant([[2,2,2],[2,2,2],[2,2,2]])

@tf.function
def add():
    add_1_operation = tf.add(Matrix_one, Matrix_two)
    return add_1_operation

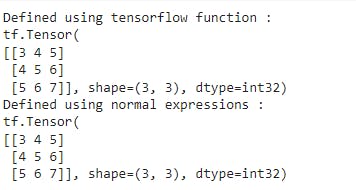

print ("Defined using tensorflow function :")

add_1_operation = add()

print(add_1_operation)

print ("Defined using normal expressions:")

add_2_operation = Matrix_one + Matrix_two

print(add_2_operation)

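Both approaches print the same matrix, [[3, 4, 5], [4, 5, 6], [5, 6, 7]], because the + operator on tensors performs the same element-wise addition as tf.add.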
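Why do tf.add and + give the same result? Python lets a class define the __add__ method, and TensorFlow's tensor type uses this hook to route + to element-wise addition. The sketch below shows the mechanism in plain Python with a hypothetical ToyTensor class and toy_add function. Unlike the real tf.add, it does not support broadcasting.

```python
class ToyTensor:
    """Hypothetical minimal 2-D 'tensor' that supports + through operator overloading."""

    def __init__(self, rows):
        self.rows = [list(r) for r in rows]

    def __add__(self, other):
        # Python turns `x + y` into x.__add__(y), so + does exactly what toy_add does.
        return toy_add(self, other)

    def __repr__(self):
        return f"ToyTensor({self.rows})"


def toy_add(x, y):
    """Element-wise addition of two equally shaped 2-D tensors (stand-in for tf.add)."""
    if len(x.rows) != len(y.rows) or any(len(r) != len(s) for r, s in zip(x.rows, y.rows)):
        raise ValueError("shapes must match")
    return ToyTensor([[p + q for p, q in zip(r, s)] for r, s in zip(x.rows, y.rows)])


matrix_one = ToyTensor([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
matrix_two = ToyTensor([[2, 2, 2], [2, 2, 2], [2, 2, 2]])
print(toy_add(matrix_one, matrix_two))  # ToyTensor([[3, 4, 5], [4, 5, 6], [5, 6, 7]])
print(matrix_one + matrix_two)          # same result, via __add__
```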

Why Tensors?

The Tensor structure helps us by giving us the freedom to shape the dataset in the way we want.

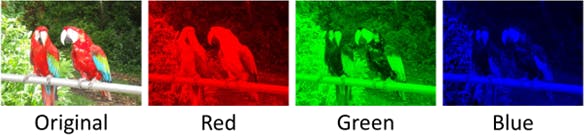

It is particularly helpful when dealing with images, because of how the information in an image is encoded.

Thinking about images, it's easy to see that an image has a height and a width, so it would make sense to represent its information with a two-dimensional structure (a matrix)... until you remember that images have colors. To add information about the colors, we need another dimension, and that's when tensors become particularly helpful.

Images are encoded in color channels: at each point of the image, the data records the intensity of each color channel. The most common encoding is RGB, which stands for red, green, and blue. So the information contained in an image is the intensity of each channel at every position across the image's width and height, which naturally forms a three-dimensional tensor of shape (height, width, channels).
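To see why an image is naturally a three-dimensional tensor, here is a tiny hand-made RGB image in plain Python. The pixel values are made up for illustration, and the channel helper is hypothetical. The image's shape is (height, width, channels), and taking one channel yields an ordinary two-dimensional matrix. In TensorFlow, the same nested list could be passed to tf.constant to get a tensor of shape (2, 2, 3).

```python
# A made-up 2x2-pixel RGB image stored as nested lists with shape
# (height, width, channels) = (2, 2, 3). Each innermost triple is the
# (red, green, blue) intensity of one pixel, on the common 0-255 scale.
image = [
    [[255, 0, 0], [0, 255, 0]],      # row 0: a red pixel, a green pixel
    [[0, 0, 255], [255, 255, 255]],  # row 1: a blue pixel, a white pixel
]

height = len(image)
width = len(image[0])
channels = len(image[0][0])
print("shape:", (height, width, channels))  # shape: (2, 2, 3)

CHANNEL_INDEX = {"red": 0, "green": 1, "blue": 2}


def channel(img, color):
    """Slice out one color channel: the result is a height x width matrix."""
    k = CHANNEL_INDEX[color]
    return [[pixel[k] for pixel in row] for row in img]


print("red channel:", channel(image, "red"))  # red channel: [[255, 0], [0, 255]]
```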

Variables

Let's look at how TensorFlow handles variables. First of all, if we already have tensors, why do we need variables? A tensor is immutable: once created, its value cannot change. TensorFlow variables are used to share and persist state that our program manipulates. A tf.Variable stores a writable tensor value that lives on across function calls (and, in graph mode, across runs of the graph), so you can update its value step by step. This is exactly what model weights need during training.

Let's create a simple counter by initializing a variable v that we will increase by one at a time:

v = tf.Variable(0)

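We now create a Python function, increment_by_one. It calls the variable's assign_add method, which adds the given amount (here 1) to the value stored in the variable. Unlike tf.add, which returns a new tensor and leaves its inputs untouched, assign_add changes the variable itself, so the new value persists between calls.

@tf.function
def increment_by_one(v):
    # assign_add updates the variable in place; tf.add(v, 1) would only return a new tensor
    v.assign_add(1)

for i in range(3):
    increment_by_one(v)
    print(v.numpy())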

This prints the values 1, 2, and 3, one per call.
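The difference between a variable and a plain tensor comes down to where state lives. In the plain-Python sketch below, a hypothetical ToyVariable class (not TensorFlow's) keeps a value that functions can update in place, much as assign_add updates a tf.Variable. An immutable tuple stands in for a tensor, which can only produce new values.

```python
class ToyVariable:
    """Hypothetical stand-in for tf.Variable: a box whose stored value can change."""

    def __init__(self, initial_value):
        self.value = initial_value

    def assign_add(self, delta):
        # Update the stored state in place, similar in spirit to tf.Variable.assign_add.
        self.value = self.value + delta
        return self.value


def increment_by_one(var):
    var.assign_add(1)


counter = ToyVariable(0)
for _ in range(3):
    increment_by_one(counter)
    print(counter.value)  # 1, then 2, then 3

# Contrast: an immutable value (a tuple here, like a tensor) cannot change in place;
# "adding" to it builds a new object and leaves the original untouched.
t = (0,)
t_plus_one = tuple(x + 1 for x in t)
print(t, t_plus_one)  # (0,) (1,)
```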

Operations

Operations are the nodes of a graph: they represent mathematical operations on tensors. An operation can be any kind of function, such as adding or subtracting tensors, or an activation function.

tf.constant, tf.matmul, tf.add, and tf.nn.sigmoid are some of the operations in TensorFlow. They are like Python functions, but they operate directly on tensors, and each one does one specific thing.
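To make "each one does one specific thing" concrete, here are plain-Python versions of what two of these ops compute. tf.matmul multiplies matrices, and tf.nn.sigmoid applies the logistic function 1 / (1 + e^(-x)) to every element. The helper functions below are illustrative sketches on nested lists only. The real ops run optimized kernels on tensors, and on GPUs where available.

```python
import math


def matmul(a, b):
    """Matrix product of two nested-list matrices, the computation tf.matmul performs."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def sigmoid(matrix):
    """Element-wise logistic sigmoid 1 / (1 + e^-x), the function tf.nn.sigmoid applies."""
    return [[1.0 / (1.0 + math.exp(-x)) for x in row] for row in matrix]


m1 = [[1, 2], [3, 4]]
m2 = [[5, 6], [7, 8]]
print(matmul(m1, m2))     # [[19, 22], [43, 50]]
print(sigmoid([[0.0]]))   # [[0.5]]
print(sigmoid([[-2.0, 2.0]]))  # values squashed into the range (0, 1)
```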

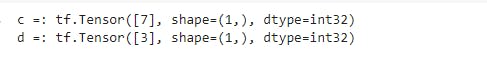

a = tf.constant([5])

b = tf.constant([2])

c = tf.add(a,b)

d = tf.subtract(a,b)

print ('c =: %s' % c)

print ('d =: %s' % d)

Both results are printed as one-element int32 tensors: c holds [7] (5 + 2) and d holds [3] (5 - 2).

And we are done! Stay tuned for more.

(Material and content credit: labs.cognitiveclass.ai/v2/tools/jupyterlab?..)